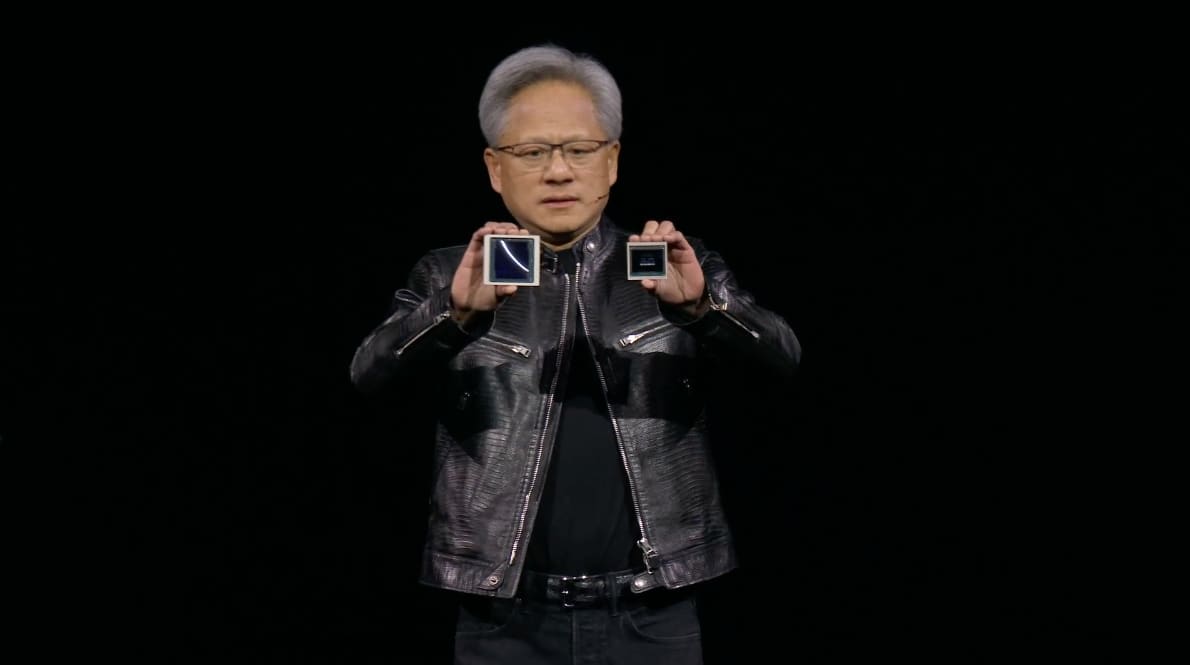

Nvidia CEO Jensen Huang delivers a keynote speech during the Nvidia GTC artificial intelligence conference at the SAP Center on March 18, 2024 in San Jose, California.

Justin Sullivan | Getty Images

The new generation of AI graphics processors is named Blackwell. The first Blackwell chip is called the GB200 and will ship later this year. Nvidia is enticing its customers with more powerful chips to spur new orders. Companies and software makers, for example, are still scrambling to get their hands on the current generation of “Hopper” H100s and similar chips.

“Hopper is fantastic, but we need bigger GPUs,” Nvidia CEO Jensen Huang said Monday at the company's developer conference in California.

The company also introduced revenue-generating software called NIM, which makes it easier to deploy AI and gives customers another reason to stick with Nvidia chips over a growing field of competitors.

Nvidia executives say the company is becoming less of a mercenary chip provider and more of a platform provider, like Microsoft or Apple, on which other companies can build software.

“Blackwell is not a chip, it's the name of a platform,” Huang said.

“The sellable commercial product was the GPU, and the software was all to help people use the GPU in different ways,” Nvidia enterprise VP Manuvir Das said in an interview. “Of course, we still do that. But what's really changed is that we now have a commercial software business.”

Das said Nvidia's new software will make it easier to run programs on any of Nvidia's GPUs.

“If you're a developer, you've got an interesting model that you want people to adopt, and if you put it on a NIM, we'll make sure it runs on all our GPUs, so you reach a lot of people,” Das said.

Nvidia's GB200 Grace Blackwell Superchip, with two B200 graphics processors and one Arm-based central processor.

Every two years Nvidia updates its GPU architecture, unlocking a big jump in performance. Many of the AI models released in the past year were trained on the company's Hopper architecture, used by chips such as the H100, which was announced in 2022.

Blackwell-based processors like the GB200 offer a huge performance boost for AI companies, with up to 20 petaflops in AI performance versus 4 petaflops for the H100, Nvidia says. The extra processing power will help AI companies train larger and more complex models, Nvidia said.

The Blackwell chip has what Nvidia calls a “transformer engine,” built specifically to run transformer-based AI, one of the core technologies underpinning ChatGPT.

The Blackwell GPU is large and combines two separately manufactured dies into a single chip manufactured by TSMC. It will also be available as a full server called the GB200 NVL72, which combines 72 Blackwell GPUs with other Nvidia components designed to train AI models.

Nvidia CEO Jensen Huang compares the size of the new “Blackwell” chip to the current “Hopper” H100 chip at the company's developer conference in San Jose, California.

Nvidia

Amazon, Google, Microsoft and Oracle will sell access to GB200 through cloud services. The GB200 combines two B200 Blackwell GPUs with an ARM-based Grace CPU. Amazon Web Services will build a server cluster with 20,000 GB200 chips, Nvidia said.
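The chip counts quoted here can be cross-checked with simple arithmetic. The sketch below uses only figures from this article and assumes that every Blackwell GPU in the 72-GPU server and in the AWS cluster ships as part of a GB200; the constant and function names are illustrative, not Nvidia terminology.

```python
# Figures quoted in the article.
B200_GPUS_PER_GB200 = 2     # one GB200 pairs two B200 GPUs with a Grace CPU
GPUS_PER_SERVER = 72        # Blackwell GPUs in the full server system
AWS_GB200_CHIPS = 20_000    # GB200 chips in the planned AWS cluster


def gb200_chips_for(gpu_count: int) -> int:
    """GB200 chips needed to supply gpu_count Blackwell GPUs (assumes all GPUs come in GB200 pairs)."""
    return gpu_count // B200_GPUS_PER_GB200


def gpus_in(gb200_chips: int) -> int:
    """Blackwell GPUs contained in the given number of GB200 chips."""
    return gb200_chips * B200_GPUS_PER_GB200


print(gb200_chips_for(GPUS_PER_SERVER))  # 36 GB200 chips per server
print(gpus_in(AWS_GB200_CHIPS))          # 40000 Blackwell GPUs in the AWS cluster
```

Under that assumption, the 72-GPU server holds 36 GB200 chips and the AWS cluster holds 40,000 Blackwell GPUs.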

Nvidia said the system can deploy a 27-trillion-parameter model. That is much larger than even the biggest models, such as GPT-4, which reportedly has 1.7 trillion parameters. Many artificial intelligence researchers believe that bigger models with more parameters and data could unlock new capabilities.

Nvidia has not provided a cost for the new GB200 or the systems it will be used on. Nvidia's Hopper-based H100 costs between $25,000 and $40,000 per chip, with full systems costing up to $200,000, according to analyst estimates.

Nvidia will also sell B200 graphics processors as part of a complete system.

Nvidia announced the addition of a new product called NIM to its Nvidia Enterprise Software subscription.

NIM makes it easier to use older Nvidia GPUs for inference, the process of running AI software, and will allow companies to continue using the hundreds of millions of Nvidia GPUs they already own. Inference requires less computational power than the initial training of a new AI model. NIM is aimed at companies that want to run their own AI models instead of buying access to AI results as a service from companies like OpenAI.

The strategy is to get customers who buy Nvidia-based servers to sign up for the Nvidia enterprise software subscription, which costs $4,500 per GPU per year to license.
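To put the license fee in perspective, here is a rough calculation. It assumes flat per-GPU pricing with no volume discount, and the 8-GPU server is a hypothetical configuration chosen only for illustration.

```python
LICENSE_USD_PER_GPU_PER_YEAR = 4_500  # figure quoted in the article


def annual_license_cost(gpu_count: int) -> int:
    """Yearly license cost in USD, assuming a flat per-GPU price."""
    if gpu_count < 0:
        raise ValueError("gpu_count must be non-negative")
    return gpu_count * LICENSE_USD_PER_GPU_PER_YEAR


# Hypothetical server with 8 GPUs (an assumption, not a configuration from the article).
print(annual_license_cost(8))  # 36000
```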

Nvidia works with AI companies like Microsoft or Hugging Face to ensure their AI models run on all compatible Nvidia chips. Then, using a NIM, developers can efficiently run the model on their own servers or cloud-based Nvidia servers without a lengthy configuration process.

“In my code, where I was calling into OpenAI, I will replace one line of code to point it to this NIM that I got from Nvidia instead,” Das said.
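A minimal sketch of what such a one-line change could look like, assuming the NIM exposes a chat endpoint shaped like OpenAI's. The local address, the model name, and the helper function are placeholders for illustration, not details from Nvidia. The request is only built here, never sent.

```python
import json
from urllib.request import Request

# Hosted endpoint the application called originally.
OPENAI_BASE_URL = "https://api.openai.com/v1"
# Hypothetical address of a self-hosted NIM; an assumption for illustration.
NIM_BASE_URL = "http://localhost:8000/v1"

# The "one line" that changes: which base URL the application points at.
BASE_URL = NIM_BASE_URL


def build_chat_request(prompt: str, model: str, base_url: str = BASE_URL) -> Request:
    """Build (but do not send) a chat-completion style HTTP request."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "example-model" is a placeholder model name.
request = build_chat_request("Summarize the Blackwell announcement.", model="example-model")
print(request.full_url)
```

The rest of the application stays the same in this sketch; only the value assigned to `BASE_URL` differs between the hosted and self-hosted setups.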

Nvidia says the software will enable AI to run on GPU-equipped laptops instead of servers in the cloud.